Unusual Article Uncovers The Deceptive Practices Of DeepSeek ChatGPT


During inference, we employed the self-refinement approach (another widely adopted technique proposed by CMU!), giving the policy model feedback on the execution results of the generated program (e.g., invalid output, execution failure) and allowing the model to refine its solution accordingly. To harness the advantages of both approaches, we implemented the Program-Aided Language Models (PAL) or, more precisely, the Tool-Augmented Reasoning (ToRA) approach, originally proposed by CMU & Microsoft. Natural language excels at abstract reasoning but falls short in precise computation, symbolic manipulation, and algorithmic processing. We noted that LLMs can perform mathematical reasoning using both text and programs. In both text and image generation, we have seen great step-function-like improvements in model capabilities across the board. While we have seen attempts to introduce new architectures such as Mamba and, more recently, xLSTM, to name just a few, it seems likely that the decoder-only transformer is here to stay, at least for the most part. While much of the progress has happened behind closed doors in frontier labs, we have seen a lot of effort in the open to replicate these results. I have two reasons for this speculation. Cochrane: There are a few reasons.
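The self-refinement loop described above (generate a program, execute it, feed any failure back to the policy model, try again) can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the generated program stores its result in a variable named `answer`, `generate` is a hypothetical stand-in for the policy model, and `fake_policy` is a made-up stub. Note that `exec` is not a sandbox; real systems run untrusted code in isolation.

```python
from typing import Callable, Optional, Tuple


def run_program(code: str) -> Tuple[Optional[object], Optional[str]]:
    """Execute generated Python code and return (answer, error_message).

    Assumption: the program stores its result in a variable named `answer`.
    Warning: exec() provides no isolation; this is for illustration only.
    """
    namespace: dict = {}
    try:
        exec(code, namespace)
    except Exception as exc:  # execution failure
        return None, f"execution failure: {type(exc).__name__}: {exc}"
    if "answer" not in namespace:
        return None, "invalid output: no variable named `answer`"
    return namespace["answer"], None


def solve_with_refinement(
    problem: str,
    generate: Callable[[str, Optional[str]], str],
    max_rounds: int = 3,
) -> Optional[object]:
    """Ask the (hypothetical) policy model for a program, run it, and feed errors back."""
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        code = generate(problem, feedback)
        answer, error = run_program(code)
        if error is None:
            return answer
        feedback = error  # the model sees this message on its next attempt
    return None  # give up after max_rounds failed attempts


def fake_policy(problem: str, feedback: Optional[str]) -> str:
    """Hypothetical stub: fails on the first try, then 'repairs' its program."""
    if feedback is None:
        return "answer = 1 / 0"
    return "answer = sum(i * i for i in range(1, 11))"


print(solve_with_refinement("Sum of squares of 1..10", fake_policy))  # prints 385
```

The first attempt raises `ZeroDivisionError`, the error text becomes feedback, and the second attempt succeeds. Computation is delegated to the interpreter, which is the point of the PAL/ToRA style: the model reasons in text, but the arithmetic is done by code.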

It’s notoriously difficult because there is no general method to apply; solving it requires creative thinking to exploit the problem’s structure. It requires the model to understand geometric objects based on textual descriptions and to perform symbolic computations using the distance formula and Vieta’s formulas. Inference requires significant numbers of Nvidia GPUs and high-performance networking. Each of the three-digit numbers to is colored blue or yellow in such a way that the sum of any two (not necessarily different) yellow numbers is equal to a blue number. What is the sum of the squares of the distances from and to the origin? Still, there is a sense that we will be bowled over by something even bigger. Large Language Models are undoubtedly the most important part of the current AI wave, and they are currently the area where most research and investment is going. Much about DeepSeek has perplexed analysts poring over the startup’s public research papers about its new model, R1, and its precursors. Our final answers were derived through a weighted majority voting system, which consists of generating multiple solutions with a policy model, assigning a weight to each solution using a reward model, and then selecting the answer with the highest total weight.
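The weighted majority voting step can be sketched in a few lines. This is a minimal illustration under stated assumptions: the text does not say how the reward model scores a solution, so `reward` is a hypothetical callable, and the solutions and scores below are invented purely to show the mechanics.

```python
from collections import defaultdict
from typing import Callable, Hashable, Sequence, Tuple


def weighted_majority_vote(
    candidates: Sequence[Tuple[Hashable, str]],
    reward: Callable[[str], float],
) -> Hashable:
    """Pick the final answer whose supporting solutions have the largest summed reward.

    candidates: (final_answer, solution_text) pairs sampled from the policy model.
    reward: hypothetical reward-model scorer mapping a solution to a weight.
    """
    totals: dict = defaultdict(float)
    for answer, solution in candidates:
        totals[answer] += reward(solution)  # each solution votes with its weight
    # Ties go to the answer seen first (dict insertion order).
    return max(totals, key=totals.get)


# Invented example: answer 17 has more votes, but 42 has more total weight.
candidates = [(42, "sol A"), (17, "sol B"), (17, "sol C"), (42, "sol D"), (17, "sol E")]
scores = {"sol A": 0.9, "sol B": 0.2, "sol C": 0.3, "sol D": 0.8, "sol E": 0.1}
print(weighted_majority_vote(candidates, scores.get))  # prints 42
```

With uniform weights (`lambda s: 1.0`) the same function reduces to plain majority voting and would return 17; the reward model lets a few high-quality solutions outvote many weak ones.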

Specifically, we paired a policy model, designed to generate problem solutions in the form of computer code, with a reward model, which scored the outputs of the policy model. Earlier this week, DeepSeek, a well-funded Chinese AI lab, released an "open" AI model that beats many rivals on popular benchmarks. DeepSeek is shaking up the AI industry with cost-efficient large language models it claims can perform just as well as rivals from giants like OpenAI and Meta. The researchers say they use existing technology as well as open-source code: software that can be used, modified, or distributed by anyone free of charge. Attracting attention from world-class mathematicians as well as machine learning researchers, the AIMO sets a new benchmark for excellence in the field. Specifically, DeepSeek introduced Multi-head Latent Attention (MLA), designed for efficient inference through KV-cache compression. AIMO has introduced a series of progress prizes. The advisory committee of AIMO includes Timothy Gowers and Terence Tao, both winners of the Fields Medal. Dense transformers across the labs have, in my view, converged on what I call the Noam Transformer (after Noam Shazeer). A year that started with OpenAI dominance is now ending with Anthropic’s Claude as my most-used LLM and the arrival of several labs that are all trying to push the frontier, from xAI to Chinese labs like DeepSeek and Qwen.
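The core idea behind KV-cache compression in Multi-head Latent Attention is to cache one small latent vector per token and reconstruct the per-head keys and values from it at attention time. The NumPy sketch below illustrates only that low-rank idea, with made-up dimensions and random weights; it deliberately omits query compression, the decoupled rotary-embedding keys, and the folding of up-projections into other matrices, so it should not be read as DeepSeek's implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, n_heads, d_head, d_latent = 512, 8, 64, 64  # hypothetical sizes

# Down-projection: hidden state -> small latent. Only this latent is cached.
W_down = rng.standard_normal((d_model, d_latent)) / np.sqrt(d_model)
# Up-projections: latent -> per-head keys and values, recomputed when attending.
W_up_k = rng.standard_normal((d_latent, n_heads * d_head)) / np.sqrt(d_latent)
W_up_v = rng.standard_normal((d_latent, n_heads * d_head)) / np.sqrt(d_latent)
W_q = rng.standard_normal((d_model, n_heads * d_head)) / np.sqrt(d_model)


def attend(h_new, latent_cache):
    """One decoding step: cache the new token's latent, then attend over all tokens."""
    latent_cache = np.vstack([latent_cache, h_new @ W_down])  # (T, d_latent)
    T = latent_cache.shape[0]
    k = (latent_cache @ W_up_k).reshape(T, n_heads, d_head)
    v = (latent_cache @ W_up_v).reshape(T, n_heads, d_head)
    q = (h_new @ W_q).reshape(n_heads, d_head)
    scores = np.einsum("hd,thd->ht", q, k) / np.sqrt(d_head)  # (n_heads, T)
    scores -= scores.max(axis=1, keepdims=True)  # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    out = np.einsum("ht,thd->hd", weights, v).reshape(n_heads * d_head)
    return out, latent_cache


cache = np.zeros((0, d_latent))
for _ in range(10):  # decode 10 tokens with random hidden states
    out, cache = attend(rng.standard_normal(d_model), cache)

per_token_mla = d_latent              # floats cached per token (per layer) here
per_token_mha = 2 * n_heads * d_head  # full K and V per token in standard MHA
print(cache.shape, per_token_mha // per_token_mla)  # prints (10, 64) 16
```

With these illustrative sizes, each token costs 64 cached floats instead of 1,024, a 16x reduction; the trade-off is the extra up-projection work at every attention step.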

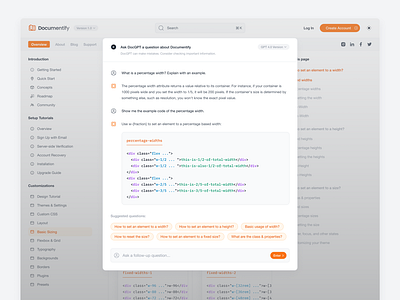

It offers robust support for various Large Language Model (LLM) runners, including Ollama and OpenAI-compatible APIs. DeepSeek's AI models are available through its official website, where users can access the DeepSeek-V3 model free of charge. The program, called DeepSeek-R1, has stirred considerable concern: ultrapowerful Chinese AI models are exactly what many leaders of American AI companies feared when they, and more recently President Donald Trump, sounded alarms about a technological race between the United States and the People’s Republic of China. This bias is often a reflection of human biases present in the data used to train AI models, and researchers have put much effort into "AI alignment," the process of trying to eliminate bias and align AI responses with human intent. What's interesting about the ChatGPT outage is that it has exposed how many people have already come to rely on the AI chatbot for both work and play, much as they rely on search engines and social media. Google is reportedly racing to adapt Search, and possibly other products, in response to ChatGPT. ChatGPT reached 1 million users five days after its launch. 2024 has also been the year in which Mixture-of-Experts models came back into the mainstream, particularly because of the rumor that the original GPT-4 was 8x220B experts.
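To make the Mixture-of-Experts idea concrete, here is a minimal top-k routing sketch in NumPy. All sizes, weights, and the ReLU feed-forward experts are hypothetical choices for illustration; it says nothing about GPT-4's actual (unconfirmed) design and omits the load-balancing losses and capacity limits that production MoE layers typically use.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_ff, n_experts, top_k = 16, 32, 8, 2  # hypothetical sizes

# Router (gating) weights and one small two-layer FFN per expert.
W_gate = rng.standard_normal((d_model, n_experts)) * 0.1
experts = [
    (rng.standard_normal((d_model, d_ff)) * 0.1, rng.standard_normal((d_ff, d_model)) * 0.1)
    for _ in range(n_experts)
]


def moe_layer(x):
    """x: (tokens, d_model). Each token is processed only by its top_k experts."""
    logits = x @ W_gate                           # (tokens, n_experts) router scores
    top = np.argsort(logits, axis=1)[:, -top_k:]  # indices of the top_k experts per token
    out = np.zeros_like(x)
    for t in range(x.shape[0]):
        sel = logits[t, top[t]]
        gates = np.exp(sel - sel.max())
        gates /= gates.sum()                      # softmax over the selected experts only
        for g, e in zip(gates, top[t]):
            w_in, w_out = experts[e]
            out[t] += g * (np.maximum(x[t] @ w_in, 0.0) @ w_out)  # gated ReLU FFN output
    return out, top


tokens = rng.standard_normal((4, d_model))
y, chosen = moe_layer(tokens)
print(y.shape, chosen.shape)  # prints (4, 16) (4, 2)
```

The design point is that total parameters grow with `n_experts`, while per-token compute grows only with `top_k`, which is why MoE models can be very large yet relatively cheap to run.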
